3. Introduction#

This article covers:

Precision

Recall

F1 Score

4. Motivation#

Suppose we are tasked with detecting anomalies in the following dataset.

import numpy as np

import matplotlib.pyplot as plt

n = 200

f = 4 # Frequency

x = np.cos(np.linspace(0, 2 * f * np.pi, n))

x[50] = 2

x[75] = -1.5

y = np.zeros(n)

y[50] = 1

y[75] = 1

outlier_pos = np.argwhere(y)[:, 0]

fig, ax = plt.subplots()

ax.plot(x)

ax.scatter(outlier_pos, x[outlier_pos], color="tab:orange", label="outliers")

ax.legend()

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

Cell In[1], line 1

----> 1 import numpy as np

2 import matplotlib.pyplot as plt

4 n = 200

ModuleNotFoundError: No module named 'numpy'

Our dataset x has two outliers. Let us use a simple threshold to find these outliers. A threshold of 1.75 means our model will only capture one of the outliers.

def find_outliers(x, threshold=1.5):

return (x >= threshold) | (x <= - threshold)

y_hat = find_outliers(x, 1.75)

print("Number of outliers found:", sum(y_hat))

Number of outliers found: 1

Now let us calculate the accuracy of our predictions.

(y == y_hat).mean()

0.995

The model is 99.5% accuracy. But since this is an outlier detection problem, we know that outliers are going to be rare so have a model that can find the not anomalous points is not very useful.

In fact, in this example, if our model simply predicted not anomalous all the time, then it would have an accuract of 99% since there are 200 data points and 2 anomalies. This highlights the limitations of using accuracy in some problems. What we can use instead is precision and recall.

4.1. Precision#

Precision is a measure of the proportion of predicted positives that were actually true positives.

from IPython.core.display import Image

from IPython.display import display

display(Image(url='Images/precision.png', width=200))

The formula for precisions is:

Let us calculate precision for our example.

tp = ((y == 1) & (y_hat == 1)).sum()

fp = ((y == 0) & (y_hat == 1)).sum()

print(f"TP: {tp}, FP: {fp}")

TP: 1, FP: 0

So, the precisions is:

precision = tp / (tp + fp)

print(f"Precision: {precision}")

Precision: 1.0

So, the precision higher than the accuracy. This metric still misses the fact that one of the true positives has been missed. This is where recall comes in.

4.2. Recall#

Recall is the proportion of actuall positives that were predicted to be positive. It involes true positives and false negatives.

from IPython.core.display import Image

from IPython.display import display

display(Image(url='Images/recall.png', width=200))

The formula for recall is:

Let us calculate recall for our example.

tp = ((y == 1) & (y_hat == 1)).sum()

fn = ((y == 1) & (y_hat == 0)).sum()

recall = tp / (tp + fn)

print(recall)

0.5

Our model detect one anomaly correctly but detected the other incorrectly to recall reflects reflects that property well.

Recall does not take into account false positives though which may be important. F1 score combines both precision and recall.

4.3. F1 Score#

F1 score is the harmonic mean of precision and recall. We will discuss the harmonic mean later on but for now let create our own version of F1 score using the arithmetic mean (add up your numbers and divide by how many there are). So, the formula for our version of F1 score which we will call \(\hat{F1}\) is:

Let us calculate \(\hat{F1}\) for our model.

tp = ((y == 1) & (y_hat == 1)).sum()

fp = ((y == 0) & (y_hat == 1)).sum()

fn = ((y == 1) & (y_hat == 0)).sum()

precision = tp / (tp + fp)

recall = tp / (tp + fn)

f1_hat = (precision + recall) / 2

print(f"F1 hat: {f1_hat}")

F1 hat 0.75

\(\hat{F1}\) is slightly higher than recall, so our score has been increased because the model did not predict any false positives.

5. F1 Score#

F1 score is a method for quantifying the performance of a model. It is typically used for binarcy classification but F1 score can be extended to multiclass problems as well.

%pip install numpy scikit-learn matplotlib

Requirement already satisfied: numpy in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (1.26.4)

Requirement already satisfied: scikit-learn in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (1.5.0)

Requirement already satisfied: matplotlib in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (3.8.3)

Requirement already satisfied: scipy>=1.6.0 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from scikit-learn) (1.13.1)

Requirement already satisfied: joblib>=1.2.0 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from scikit-learn) (1.4.2)

Requirement already satisfied: threadpoolctl>=3.1.0 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from scikit-learn) (3.5.0)

Requirement already satisfied: cycler>=0.10 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (0.12.1)

Requirement already satisfied: kiwisolver>=1.3.1 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (1.4.5)

Requirement already satisfied: pyparsing>=2.3.1 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (3.1.2)

Requirement already satisfied: python-dateutil>=2.7 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (2.9.0.post0)

Requirement already satisfied: fonttools>=4.22.0 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (4.49.0)

Requirement already satisfied: packaging>=20.0 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (24.0)

Requirement already satisfied: pillow>=8 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (10.2.0)

Requirement already satisfied: contourpy>=1.0.1 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from matplotlib) (1.2.0)

Requirement already satisfied: six>=1.5 in /home/alex/documents/notes/.venv/lib/python3.10/site-packages (from python-dateutil>=2.7->matplotlib) (1.16.0)

Note: you may need to restart the kernel to use updated packages.

import numpy as np

import matplotlib.pyplot as plt

from sklearn import linear_model

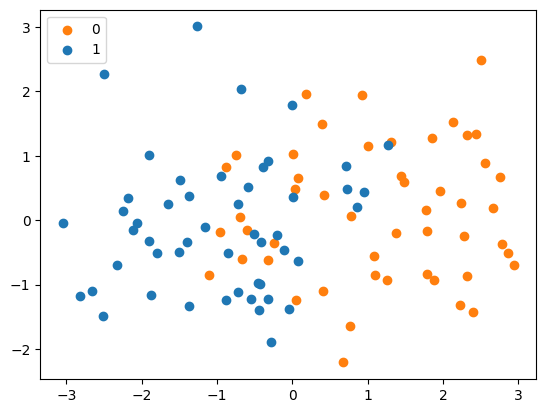

Let us create a toy dataset classifying two gaussian distributions.

n = 50

x = np.concatenate([

np.random.normal([1, 0], 1, size=(n, 2)),

np.random.normal([-1, 0], 1, size=(n, 2))

])

y = np.asarray([0] * n + [1] * n)

Plot x and y.

plt.scatter(x[:n, 0], x[:n, 1], color="tab:orange", label=0)

plt.scatter(x[n:, 0], x[n:, 1], color="tab:blue", label=1)

plt.legend()

<matplotlib.legend.Legend at 0x7f8815f77790>

Let us train a logistic regression model on this data.

model = linear_model.LogisticRegression()

model.fit(x, y)

y_hat = model.predict(x)

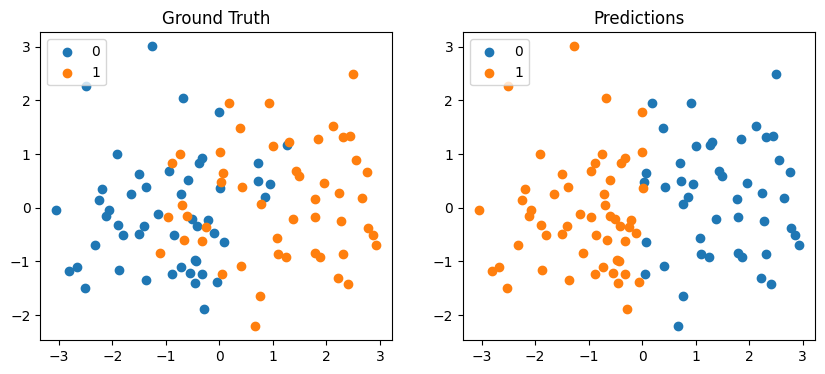

Plot the predictions and the true labels.

fig, ax = plt.subplots(1, 2, figsize=(10, 4))

ax[0].scatter(x[n:, 0], x[n:, 1], label=0)

ax[0].scatter(x[:n, 0], x[:n, 1], label=1)

ax[0].legend()

ax[0].set_title("Ground Truth")

ax[1].scatter(x[y_hat == 0, 0], x[y_hat == 0, 1], label=0)

ax[1].scatter(x[y_hat == 1, 0], x[y_hat == 1, 1], label=1)

ax[1].legend()

ax[1].set_title("Predictions")

Text(0.5, 1.0, 'Predictions')

The model clearly gets several predictions wrong. We can break our predictions, y_hat, down into for categories:

y_hatis 1 andy_hatis 1 (true positive).y_hatis 1 andy_hatis 0 (false negative).y_hatis 0 andy_hatis 1 (false positive).y_hatis 0 andy_hatis 0 (true negative).

Let us calculate these quantitites for our model.

true_pos = np.sum((y == 1) & (y_hat == 1))

true_neg = np.sum((y == 0) & (y_hat == 0))

false_pos = np.sum((y == 0) & (y_hat == 1))

false_neg = np.sum((y == 1) & (y_hat == 0))

print(f"true_pos: {true_pos}, true_neg: {true_neg}, false_pos: {false_pos}, false_neg: {false_neg}")

true_pos: 44, true_neg: 40, false_pos: 10, false_neg: 6

Our model gives mostly true positives and true negatives which is good. When assessing the quality of the model, our appetite for false positives and false negatives may depend on our application. For example:

If 1 means a patients has a disease and 0 means they don’t, we may be more willing to accept false positives than false negatives.

6. Precision and Recall#

Precisions and recall is a way of comparing the actual results with the predicted results.

Precision is the proportion of positive predictions that were actually positive.

Recall is the proportion of actual positives that were predicted to be positive.

Let us write functions to calculate precision and recall.

def calculate_precision(y, y_hat):

true_pos = np.sum((y == 1) & (y_hat == 1))

false_pos = np.sum((y == 0) & (y_hat == 1))

return true_pos / (true_pos + false_pos)

def calculate_recall(y, y_hat):

true_pos = np.sum((y == 1) & (y_hat == 1))

false_neg = np.sum((y == 1) & (y_hat == 0))

return true_pos / (true_pos + false_neg)

precision = calculate_precision(y, y_hat)

recall = calculate_recall(y, y_hat)

print(f"Precision: {precision}, Recall: {recall}")

Precision: 0.8148148148148148, Recall: 0.88

Precisions would be 1 if we had 0 false positives. Recall would be 1 if we had 0 false negatives.

7. F1 Score#

F1 score is the harmonic mean of precision and recall. Let us first recall the definition of harmonic mean. Suppose we have a sequence of numbers \(x_1, x_2, \dots, x_n\). The harmonic mean of this set of numbers is,

So, the harmonic mean of precision and recall (F1 score) is,

Let us write a function for calculate the F1 score.

def calculate_f1(y, y_hat):

recall = calculate_recall(y, y_hat)

precision = calculate_precision(y, y_hat)

return 2 * (precision * recall) / (precision + recall)

f1_score = calculate_f1(y, y_hat)

print(f"F1 score: {f1_score}")

F1 score: 0.8461538461538461

Why is the harmonic mean used when calculating f1 score as opposed to the arithmetic mean?